Introduction

Front-facing classic web servers play an important role. They handle SSL termination, proxy requests to local applications and pass PHP code to PHP-FPM processes, all while serving static assets directly.

We tend to prefer the venerable Apache for its familiar config syntax, its .htaccess configuration override support (often requested by our customers) and its many modules.

Nginx is a long-established industry standard and a top performer in many cases. However, its config syntax is somewhat quirky, it has no .htaccess support, and some features that Apache provides as modules are only available in the paid Nginx Plus version (for instance, bandwidth throttling).

Why use Caddy?

Caddy sometimes pops up in containerized contexts. But Traefik reigns as the master of proxy servers for containers (alongside Nginx), and for good reason: it was built for that purpose and has service autodiscovery plugins.

Beyond that, both Traefik and Caddy are written in Go and rely on the same Go standard library HTTP implementation (net/http), which is battle-hardened from its use at Google and across Kubernetes-related projects.

One simple thing Caddy can do that Traefik can't is serve static files, and it does so quite effectively.

In that respect it sits much closer to Apache than to Traefik or HAProxy, which are meant to "just" handle proxying and load balancing.

In my opinion, reasons to pick Caddy over traditional web servers are:

- Potentially safer: Go's garbage collector and memory management prevent most memory-related exploits;

- No dependencies: the Go compiler usually produces statically linked, self-sufficient binaries (which is why they are large files), so Caddy is easy to drop onto a relatively old server without fighting OpenSSL version woes;

- Configuration is very easy to understand, especially for modern PHP applications;

- Caddy provides an API for dynamic configuration (read and write);

- HTTP/2 and HTTP/3 support out of the box;

- Automatic HTTPS.

About that last point: HTTPS is enabled by default in Caddy. For every site configured with a domain name, it automatically attempts to obtain a Let's Encrypt certificate and redirects HTTP requests to HTTPS, with no extra configuration needed.

It's as if HTTPS and HTTPS redirection were simply assumed. A refreshing change, in my opinion.

Why not then?

The more automatic something is, the harder it becomes to obtain something custom for a very specific situation.

For instance, the Caddy directive:

```caddyfile
php_fastcgi unix//run/php/php8.2-fpm.sock
```

does a good amount of heavy lifting, including internally rewriting requests for files that don't exist to an assumed index.php, which is what most modern PHP apps require. But what if yours doesn't, for some reason?

The config suddenly becomes quite a bit larger.
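To give an idea of the size, here is a sketch modeled on the expanded form of php_fastcgi described in the Caddy documentation, with the final index.php fallback removed. It is written with Caddy 2.6 in mind and is an approximation rather than an exact equivalent; the expansion has changed between releases, so compare it against the documentation for your version.

```caddyfile
myphpapplication.net7.be {
	root * /var/www/webapp/public
	encode zstd gzip

	route {
		# Directories that contain an index.php get a trailing slash (308 redirect)
		@canonicalPath {
			file {path}/index.php
			not path */
		}
		redir @canonicalPath {http.request.orig_uri.path}/ 308

		# Try the requested file, then the directory's index.php.
		# The usual last entry, a plain "index.php" fallback, is deliberately omitted.
		@indexFiles file {
			try_files {path} {path}/index.php
			split_path .php
		}
		rewrite @indexFiles {http.matchers.file.relative}

		# Only requests ending in .php are passed to PHP-FPM over FastCGI
		@phpFiles path *.php
		reverse_proxy @phpFiles unix//run/php/php8.2-fpm.sock {
			transport fastcgi {
				split .php
			}
		}
	}

	# Everything else (static assets, missing files) falls through to file_server
	file_server
}
```

For simpler cases, php_fastcgi also accepts a try_files subdirective that overrides its default list, which may be all you need.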

In general, Caddy is great when things are modern and simple and you intend to keep them that way.

There's also the Go runtime and its garbage collector. They do use more memory than Apache or Nginx, though not that much more in my testing. Some people have reported high memory usage, caused either by bugs or by very heavy traffic: much higher than any reasonable PHP server can output. We're talking about very high numbers of requests for static files or quick proxy responses.

A garbage collector effectively prevents unexpected memory leaks caused by allocation bugs, but it won't prevent a program from using all of the system memory because of a design mistake, for instance holding on to references it no longer needs. In effect that looks like a memory leak, and a library could be the culprit rather than the main program.

This is just to point out that garbage collectors do not grant immunity to memory-related problems, though they are certainly safer.

Lastly, you have to watch out for hurdles such as certificate issuance failing because of DNS issues (for instance, if one of the domains hasn't been renewed).

Reading the Caddy logs, it isn't always obvious that a certificate request failed.

There's a bit of a learning curve to setting up the right monitoring around Caddy.
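One possible starting point, sketched with an assumed file path: the global log option configures Caddy's default logger, which is where certificate management messages are written (as opposed to per-site access logs). Writing it as JSON to a dedicated file gives existing monitoring tools something structured to watch. The path and the INFO level are our assumptions, and the directory must exist and be writable by the caddy user. Without this option, Caddy writes these messages to stderr, which the systemd service sends to the journal.

```caddyfile
{
	# Global options block: configures Caddy's default (process-wide) logger,
	# which covers TLS/ACME certificate management, not per-site access logs.
	# The file path is an assumption; the directory must be writable by Caddy.
	log {
		output file /var/log/caddy/caddy.log
		format json
		level INFO
	}
}
```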

Also somewhat important to keep in mind: the dynamic configuration API is enabled by default on port 2019, listening on localhost only.

This poses a mild security issue, as any user with a login on that system can change the web server configuration. It doesn't really matter in containers but may be a big deal on some standalone systems.
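Two global options can address this. They are sketched below as alternatives, so keep only one. admin off disables the endpoint entirely, with the drawback that caddy reload, which relies on that API, no longer works, so configuration changes require restarting the service. Alternatively, the admin endpoint can listen on a Unix socket instead of localhost:2019, letting filesystem permissions decide who can reach it. The socket path below is a hypothetical example, and its directory should only be accessible to the caddy user.

```caddyfile
{
	# Option 1: disable the admin API entirely.
	# "caddy reload" relies on this API, so config changes then need a restart.
	admin off

	# Option 2 (use instead of option 1): serve the admin API on a Unix socket
	# rather than localhost:2019. Hypothetical path; restrict its directory.
	# admin unix//var/lib/caddy/admin.sock
}
```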

Getting started with Caddy

Virtual hosts can be configured in /etc/caddy/Caddyfile.

Each site is a block of directives enclosed in curly braces ("{}"), preceded by a comma-separated list of the hostnames and/or IP addresses and ports it should answer on.

For instance, here's an example default website:

```caddyfile
:80 {
	root * /var/www/html
	file_server
}
```
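Since the address list is comma-separated, one block can also answer for several names at once. In this sketch, example.com and www.example.com are placeholders. Both names would get a certificate and serve the same files, with no redirect between them.

```caddyfile
# One site block, two hostnames (placeholders): both serve identical content over HTTPS
example.com, www.example.com {
	root * /var/www/html
	file_server
}
```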

To make sure the config is valid:

```shell
caddy validate --config /etc/caddy/Caddyfile
```

For a single-hostname modern PHP application (one that uses index.php as its fallback resource), we could use:

```caddyfile
myphpapplication.net7.be {
	root * /var/www/webapp/public
	encode zstd gzip
	file_server
	php_fastcgi unix//run/php/php8.2-fpm.sock
}
```

With these few lines we get automatic HTTPS with a Let's Encrypt certificate, HTTP-to-HTTPS redirection, compression, and HTTP/2 and HTTP/3 support.

It's a lot of heavy lifting done by very few lines of config.
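To make those defaults visible, here is a sketch. The email address, internal.example.com and its root directory are placeholders we made up. A global options block, which must come first in the Caddyfile, sets the contact email for the ACME account. A site address prefixed with http:// is served over plain HTTP only, with no certificate and no redirect.

```caddyfile
# Global options block: must be the first block in the Caddyfile
{
	# Contact address for the ACME (Let's Encrypt) account; placeholder value
	email admin@example.com
}

# Domain name only: Caddy obtains a certificate and redirects HTTP to HTTPS
myphpapplication.net7.be {
	root * /var/www/webapp/public
	encode zstd gzip
	file_server
	php_fastcgi unix//run/php/php8.2-fpm.sock
}

# Explicit http:// scheme (placeholder host and path): plain HTTP only
http://internal.example.com {
	root * /var/www/internal
	file_server
}
```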

To handle domain aliases, we can just have a block for them redirecting to the chosen canonical domain:

```caddyfile
www.myphpapplication.net7.be {
	redir https://myphpapplication.net7.be{uri}
}

myphpapplication.net7.be {
	root * /var/www/webapp/public
	encode zstd gzip
	file_server
	php_fastcgi unix//run/php/php8.2-fpm.sock
}
```

Now for a reverse proxy example, let's say we're forwarding requests to a .NET web app listening on port 8080:

```caddyfile
mydotnetapplication.net7.be {
	encode zstd gzip
	reverse_proxy :8080
}
```

And that's it. Caddy keeps the Host header intact by default.
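Caddy also adds headers such as X-Forwarded-For and X-Forwarded-Proto for the backend. If a backend instead expects its own address in the Host header (for instance, because it builds absolute URLs from it), the header can be overridden. A sketch, assuming the .NET app from the example above is reachable as localhost:8080:

```caddyfile
mydotnetapplication.net7.be {
	encode zstd gzip

	# Upstream written as localhost:8080 (assumption) so that the placeholder
	# below expands to a full host:port
	reverse_proxy localhost:8080 {
		# Send the upstream's own host:port instead of the client's Host header
		header_up Host {upstream_hostport}
	}
}
```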

Of course you can go much further: configure logging, load balance between several proxy targets, add HTTP authentication and more.
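Here is a sketch combining those three features for the .NET site above. Everything beyond the original example is hypothetical: the log path, the /admin/ area, the user name alice with its password hash placeholder (generate a real hash with caddy hash-password), a second instance on port 8081, and a /health endpoint on both instances. Caddy 2.6 spells the authentication directive basicauth; later releases call it basic_auth.

```caddyfile
mydotnetapplication.net7.be {
	encode zstd gzip

	# Access log for this site (path is an assumption; directory must be writable by Caddy)
	log {
		output file /var/log/caddy/mydotnetapplication.access.log
	}

	# HTTP basic authentication on a hypothetical /admin area.
	# Replace the placeholder with the output of: caddy hash-password
	basicauth /admin/* {
		alice <bcrypt-hash-from-caddy-hash-password>
	}

	# Two hypothetical backend instances, used in turn. An instance whose
	# /health endpoint (also hypothetical) stops answering successfully is skipped.
	reverse_proxy localhost:8080 localhost:8081 {
		lb_policy round_robin
		health_uri /health
		health_interval 10s
	}
}
```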

Rewrites are, in my opinion, much easier to reason about with Caddy as opposed to how obscure Apache's mod_rewrite rules can be.
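As an illustration, with made-up paths and a placeholder domain: a permanent redirect from old /blog/<slug> URLs to /articles/<slug> using a regular-expression capture, and an internal rewrite that answers /about with about.html without the client seeing a redirect.

```caddyfile
example.com {
	root * /var/www/html

	# External redirect (301): /blog/<slug> -> /articles/<slug> (hypothetical paths)
	@oldBlog path_regexp oldBlog ^/blog/(.*)$
	redir @oldBlog /articles/{re.oldBlog.1} permanent

	# Internal rewrite: /about is served from about.html, the URL stays the same
	rewrite /about /about.html

	file_server
}
```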

It's best to check out the documentation for more examples and information.

Some performance comparisons

We performed some basic testing of Nginx, Apache and Caddy as installed through the package manager on Debian 12, with very few modifications apart from disabling logging completely, to avoid waiting on IO as much as possible.

Test setup

- Virtual machine with 2 cores;

- Apache v2.4.57 using mpm_event with the default modules plus the FastCGI module, configured to start 2 processes;

- Nginx v1.22.1 configured to start 2 processes (the default after installation);

- Caddy v2.6.2.

The benchmark program is wrk version 4.1.0. It only speaks HTTP/1.1, without compression and over plain HTTP (so no SSL). We used the following command:

```shell
wrk -t2 -c50 -d30s "http://192.168.77.117"
```

That is 2 threads, 50 open connections and a 30-second run. Preliminary tests were run to make sure none of the servers returned errors under those test conditions (i.e. they are stress-loaded but not completely overloaded).

CPU usage was at 100% throughout the testing, and memory was more than sufficient in all cases.

PHP application test

The PHP code is handed to PHP-FPM with a static number of processes, sized to max out the CPU without being overloaded and failing to respond to some requests.

The PHP config is the default one (meaning no opcache or any optimisation whatsoever), and the PHP application is a blank Symfony 6 project. We're just requesting the index page (in dev mode).

Intuitively, this stress test should show the same throughput for every web server, because we're heavily bottlenecked by PHP. But maybe something will show up in memory use or some other metric.

We initially tested on modest systems on the same local network to simulate something close to a real scenario. However, as expected, the results were exactly the same for all servers in that case: we were severely limited by processor power and IO.

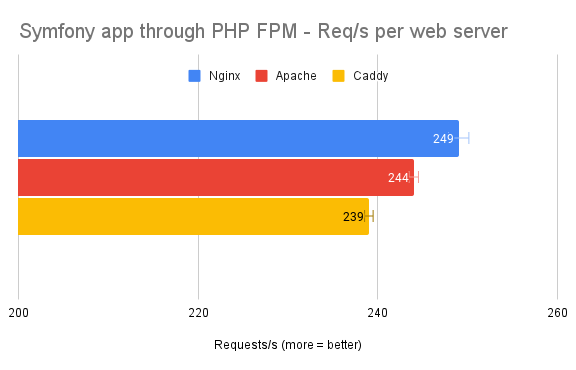

We decided to re-run the tests on virtual machines sporting much more potent processors (still limited to 2 cores) and got the following results:

There is a minor difference between the servers that keeps showing up across multiple runs.

However, we're talking about differences of a few percent, with processor power you will never find in any kind of rentable VPS.

As mentioned before, more realistic testing just yields the same throughput for all servers. But there seems to be an advantage for Nginx that might be interesting for extremely fast PHP applications.

Unfortunately, even optimized Symfony or Laravel applications aren't that fast.

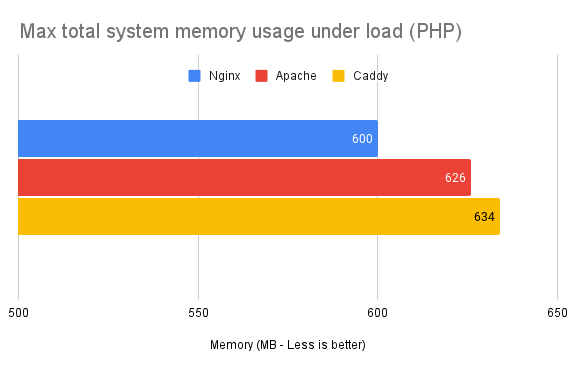

We also measured the maximum memory used by the whole system during the benchmark for each server (excluding buffers/cache):

Both Apache and Nginx spawn 2 processes (we have 2 cores), whereas Caddy always runs as a single process and relies on threads and asynchronous IO system calls.

As we can see, the difference between Nginx and Caddy is around 40 MB. Nothing too crazy, but something to consider in extremely constrained environments (think 512 MB of memory).

The Go garbage collector seemed to be pretty effective, as memory use stayed relatively consistent during the test.

Static content tests

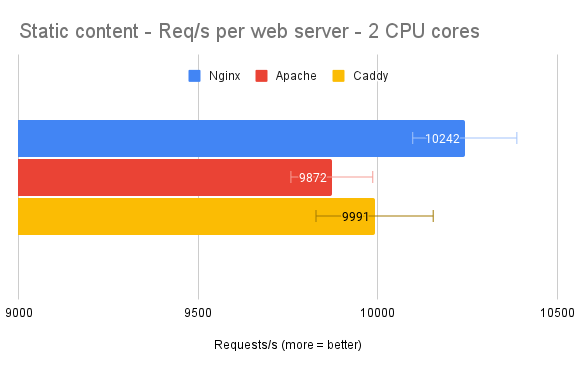

For these tests, the website configurations were changed to serve a static index.html file, and we're back on a modest VPS in a generally more realistic environment.

A quick reminder that apart from logging being disabled, the web servers are in their default configuration from the package maintainer on Debian 12.

It's possible we're missing some optimisation that would close the gaps between the results, or widen them. We're just testing the out-of-the-box behavior.

In this case, Apache is ever so slightly behind Caddy and Nginx is on top again, but once more we're talking about a difference of a few percent with a rather large standard deviation, so the ranking shouldn't be over-interpreted.

In practice, all of the servers offered pretty much the same performance.

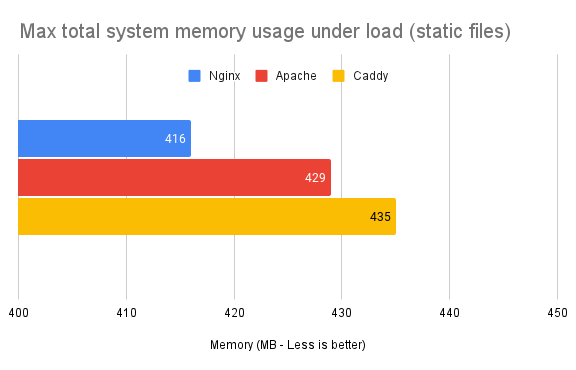

Looking at peak total memory use during the test:

The difference is even smaller than in the PHP-FPM test.

Caddy is just a couple of MB apart from Apache.

We haven't tested a proper proxy-to-another-web-server configuration, but all three servers appear to deliver practically identical performance for static content in realistic conditions.

Granted, more could be tested by varying the scenarios and the size of the static content delivered. There's also the fact that wrk only uses basic HTTP/1.1 with no SSL or compression.

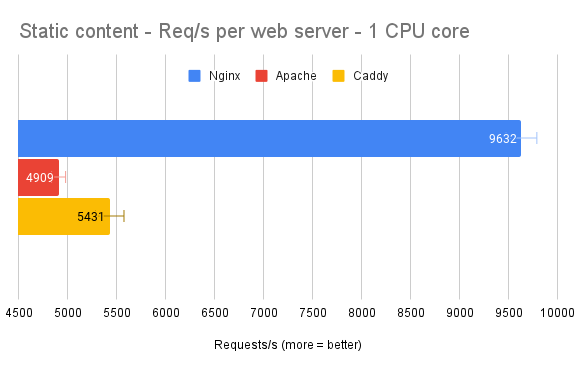

Out of curiosity we also ran some tests on the same system limited to a single core.

Nginx was limited to 1 process through the worker_processes directive.

The same was attempted for Apache in /etc/apache2/mods-enabled/mpm_event.conf, but it still seemingly spawned two processes.

We couldn't find a way to force just the one. If you know a way to do it, don't hesitate to tell us in the comments below.

Caddy always spawns a single process so nothing to do here.

Somehow, throughput is close to halved for Apache and Caddy, but not for Nginx.

Repeating the experiment seems to always yield the same results. I'm not sure what could be done differently or whether we should take these results seriously or not.

In any case, ~5000 requests per second is already a pretty good throughput.

Conclusion

Caddy is a worthwhile web server to consider for simple use cases.

Configuration is modern and straightforward with all the features you'd want and support for HTTP/3, which is very hard to find outside of projects using the Go HTTP libraries.