Introduction

Normally you'd configure your web server entirely through its config file hierarchy, which is read and parsed when the web server starts (or is reloaded using a specific signal).

In that case, the config is read once and is then fixed in memory, unalterable.

As we've seen recently, some web servers like Caddy provide an API for dynamic configuration but this has traditionally been done on the Apache web server using special .htaccess files placed alongside the website files.

Microsoft has a somewhat similar system using web.config files.

Nginx famously doesn't support .htaccess config overrides at all because, as they say on their own website, it's inefficient and Nginx is all about min/maxing throughput.

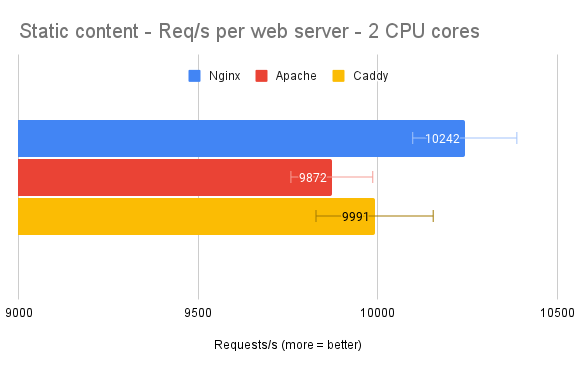

What motivated this article was trying to find reasons to justify using Nginx in front and proxying anything non-static (e.g. PHP) to Apache.

We would assume the .htaccess situation to be the only reason, because our previous tests of web servers for static content delivery (where .htaccess support was disabled for Apache) showed Nginx only about 4% ahead of Apache, which in our opinion doesn't justify a double web server setup.

Static file delivery is also so fast (provided Apache is in mpm_event mode) that it should be extremely hard to overload on pretty much any web server.

The problem with htaccess overrides

When .htaccess files are allowed through the AllowOverride directive, Apache looks for them in every directory along the path between the website root and the directory of the requested file. It then loads all the files it found, applying the uppermost one first and then each of the others in order.

For instance, requesting https://test.org/dir1/dir2/dir3/index.html makes Apache look for a .htaccess file in dir3, dir2, dir1 and at the website root.

These lookups are all system calls, which are pretty fast, but they're made for every single request.

After the lookup, any .htaccess file that was found has to be loaded, which is a more costly system call that reads the whole file into memory. Apache then needs to parse it and apply the configuration override using a hierarchical logic.
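To make the lookup order concrete, here is a minimal JavaScript sketch that lists the .htaccess candidates for a request path, relative to the website root and in the order they would be applied. It's a simplified model and not Apache's actual code: the function name `htaccessCandidates` is made up for this example, it assumes the last path segment is a file, and it ignores how far up the tree AllowOverride is actually enabled.

```javascript
// Simplified model (an assumption, not Apache's implementation):
// list the .htaccess candidates for a request path, uppermost first.
function htaccessCandidates(requestPath) {
  // Keep only the directory components; the last segment is assumed to be a file.
  const dirs = requestPath.split('/').filter(Boolean).slice(0, -1);
  const candidates = ['/.htaccess']; // the website root is always checked
  let current = '';
  for (const dir of dirs) {
    current += '/' + dir;
    candidates.push(current + '/.htaccess');
  }
  return candidates;
}

console.log(htaccessCandidates('/dir1/dir2/dir3/index.html'));
```

For the example URL above, this lists four candidates, from the website root down to dir3.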

All of that is no secret, but .htaccess files are still widely used, notably by Wordpress, where some plugins may even add or remove entries in them dynamically.

That in itself is a mild security concern. We'll however focus on the performance this time around.

Test setup

We're running Apache on Debian 11 with the default options, in mpm_event mode.

Logging has been completely disabled.

The test system is a two-core container running on a host with directly attached SSDs.

That situation is pretty much a best case scenario: containers use their host's kernel for system calls, which gives them filesystem access with no overhead, whereas a VPS typically doesn't have directly attached storage.

We always pick HTTP test parameters that represent a very high load but don't make the web server return errors, so we always make sure the web server keeps up with all the tests. Any result with errors is discarded.

Load testing program

This time around we decided to try using K6 instead of wrk.

It's definitely more modern, very customizable and seems to yield about the same results anyway, even though K6 test scripts are written in JavaScript (K6 itself is written in Go).

Test parameters were translated from what we use with wrk:

- Duration: 30 seconds

- VUs (Virtual users): 50

- "Sleep" timer: None

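What we mean by "sleep" is that we do not use the sleep instruction present in default K6 scripts, for instance:

import http from 'k6/http';

export default function() {

// No pause between iterations: each virtual user sends requests back to back.

http.get(testUrl);

//sleep(1);

}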

Test results are an average of at least 10 runs. We use the standard deviation as an indication of how much the results vary between runs.
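As an illustration of that aggregation, the following sketch computes the mean and standard deviation of a series of requests/s figures. It is not the tooling used for these tests: `summarizeRuns` is a hypothetical helper, the sample (n - 1) standard deviation is an assumption since the article doesn't say which variant was used, and the numbers are made up.

```javascript
// Mean and sample standard deviation of a series of requests/s figures.
// Assumption: sample (n - 1) standard deviation.
function summarizeRuns(runs) {
  const mean = runs.reduce((sum, x) => sum + x, 0) / runs.length;
  const variance =
    runs.reduce((sum, x) => sum + (x - mean) ** 2, 0) / (runs.length - 1);
  return { mean, stdDev: Math.sqrt(variance) };
}

// Hypothetical figures, for illustration only (not measured results).
const exampleRuns = [5010, 4950, 5120, 4890, 5030];
const summary = summarizeRuns(exampleRuns);
console.log(
  `mean=${summary.mean.toFixed(0)} req/s, stddev=${summary.stdDev.toFixed(0)} req/s`
);
```

A standard deviation that is large relative to the mean suggests that differences of a few percent between two scenarios shouldn't be over-interpreted.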

Test methodology

The results presented are averages of multiple runs with a set pause between the runs.

The metric we use is the requests/s figure.

Single line .htaccess

We use the following .htaccess file for "single line" tests:

Options +FollowSymLinks

17Kb and 170Kb .htaccess comments only

These are made of two comment lines repeating until we get the desired file size:

# Lorem, ipsum dolor sit amet consectetur adipisicing elit. Iusto, ea

# dolores, commodi ducimus accusamus neque sapiente omnis ad.

17Kb is quite large for a .htaccess file but it's not unheard of, especially when it contains a large amount of comments.

We thought it'd be interesting to see whether these comments have any impact on performance.

On the other hand, 170Kb is completely unrealistic so keep that in mind when these results come up.
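Padded test files like these could be generated along the following lines. This is a sketch under stated assumptions rather than the script actually used: it targets Node.js, treats 1 Kb as 1024 bytes, uses Unix line endings, and `buildPaddedHtaccess` is a name invented for this example.

```javascript
// Repeat the given lines in order until the content reaches targetBytes.
// Assumptions: 1 Kb = 1024 bytes and Unix line endings.
function buildPaddedHtaccess(lines, targetBytes) {
  const chunks = [];
  let size = 0;
  for (let i = 0; size < targetBytes; i++) {
    const line = lines[i % lines.length] + '\n';
    chunks.push(line);
    size += Buffer.byteLength(line, 'utf8');
  }
  return chunks.join('');
}

const commentLines = [
  '# Lorem, ipsum dolor sit amet consectetur adipisicing elit. Iusto, ea',
  '# dolores, commodi ducimus accusamus neque sapiente omnis ad.',
];
const content = buildPaddedHtaccess(commentLines, 17 * 1024);
console.log(Buffer.byteLength(content, 'utf8')); // at least 17408 bytes
```

The result can then be written out with `fs.writeFileSync('.htaccess', content)`. Passing directive lines instead of comment lines would produce a file of the same size that Apache actually has to interpret.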

17Kb and 170Kb .htaccess Options directives

These files are entirely made of the same two repeating Options directives instead of comments.

Options +FollowSymLinks

Options -Indexes

The same remarks apply here, the 170Kb file is even more unrealistic compared to the one with comments only.

"Realistic" .htaccess

This test file has the base Wordpress redirection rules followed by a large number of lines, generated by a Wordpress plugin, that are meant to block specific IP addresses.

Here's an example of these lines:

Deny from 172.16.0.111

The file size is 12Kb. We call it "realistic" because Wordpress sites fairly often have such .htaccess files (or even bigger ones) at the website root.

Storage

Two different storage setups were used for the tests:

- Locally attached SSD storage, unless mentioned otherwise. This is a best case scenario for server storage, only bested by in-memory storage;

- NFS mount (thus network storage) from a NAS that is actively being used for other operations.

In all cases, the Linux kernel is allowed to cache files. The test environment had 4GB of dedicated memory and all of the content we test easily fits in that amount.

Results

We noticed variance increases significantly when approaching server capacity for all of the results.

Some results were probably overly affected by other load on the test system itself, because our test environment wasn't isolated enough. We thought it would be more realistic that way.

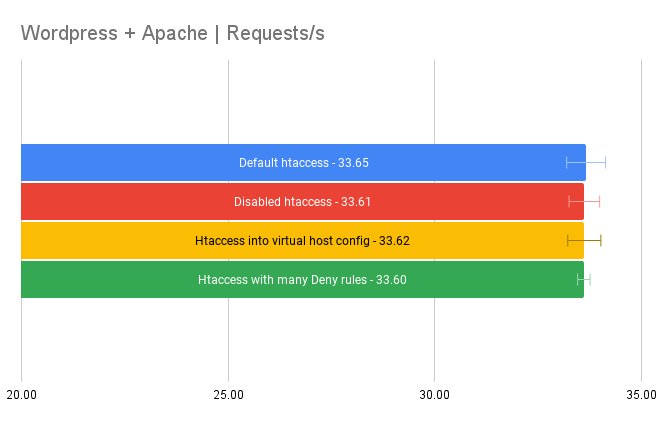

Basic Wordpress site

Our intuition when testing a freshly installed Wordpress site with default Debian PHP settings was that the PHP overhead would be so large that it would dwarf any possible slowdown caused by the small default Wordpress .htaccess file.

Here's the .htaccess file for the record:

# BEGIN WordPress

# The directives (lines) between "BEGIN WordPress" and "END WordPress" are

# dynamically generated, and should only be modified via WordPress filters.

# Any changes to the directives between these markers will be overwritten.

<IfModule mod_rewrite.c>

RewriteEngine On

RewriteRule .* - [E=HTTP_AUTHORIZATION:%{HTTP:Authorization}]

RewriteBase /

RewriteRule ^index\.php$ - [L]

RewriteCond %{REQUEST_FILENAME} !-f

RewriteCond %{REQUEST_FILENAME} !-d

RewriteRule . /index.php [L]

</IfModule>

# END WordPress

We measured the following test cases:

- Default .htaccess present and enabled with AllowOverride All;

- .htaccess disabled using AllowOverride None on the website directory;

- .htaccess disabled using AllowOverride None on the website directory, but with the .htaccess content integrated into the virtual host config (and thus into the Apache config);

- Default .htaccess extended with a lot of "Deny" directives to block IP addresses (some Wordpress plugins can generate such a file). Its size is about 12Kb.

The results were as follows, in requests per second:

All these results are basically the same. It looks like PHP is the more serious bottleneck here as we thought.

That said, no optimization steps whatsoever were taken for PHP or Wordpress. Other PHP apps may have much larger throughput, especially if the home page doesn't necessarily involve connecting to a database.

We'll also see later that static throughput is way into the thousands of requests per second, so it would take a lot of optimization to get into that territory.

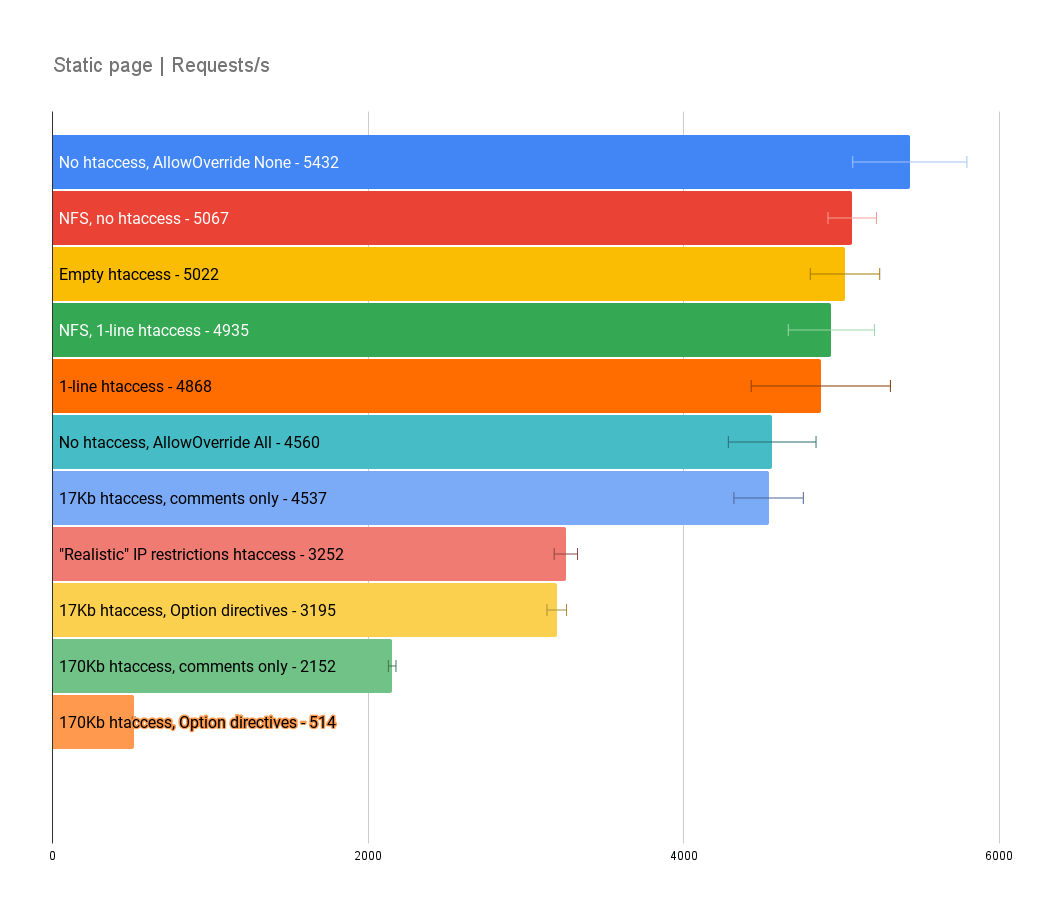

Static page

We went a little overboard with test scenarios at this point.

The best results are on top. However, anything around 5000 requests/s has quite a lot of variance.

The scenario we'd expect to have the most requests/s is indeed on top.

What we could say about the rest of the top of the chart is that just having AllowOverride All has an impact of about 16% on the request throughput.

Whether the .htaccess file contains a single line, is empty or is simply absent, on network storage or not, just enabling .htaccess files does have an impact, but it takes a significant number of directives in that file to make the impact much larger.

For instance, the 17Kb file full of comments has about the same impact as the case with no .htaccess file but AllowOverride set to All.

That makes sense: even if Apache seems to re-open the file for every connection, Linux (like most OSes) caches the files it reads with an LRU algorithm, so the file is most probably staying in memory. That would also explain why the network storage doesn't have much impact.
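For readers unfamiliar with LRU (least recently used) eviction, the idea can be sketched in a few lines of JavaScript. This only illustrates the principle and is not how the Linux page cache is implemented (the kernel's actual policy is more elaborate); the `LruCache` class and the keys used below are hypothetical.

```javascript
// Minimal LRU cache: a Map keeps insertion order, so the first key
// is always the least recently used one.
class LruCache {
  constructor(capacity) {
    this.capacity = capacity;
    this.entries = new Map();
  }

  get(key) {
    if (!this.entries.has(key)) return undefined;
    const value = this.entries.get(key);
    // Re-insert the entry to mark it as most recently used.
    this.entries.delete(key);
    this.entries.set(key, value);
    return value;
  }

  set(key, value) {
    if (this.entries.has(key)) this.entries.delete(key);
    this.entries.set(key, value);
    if (this.entries.size > this.capacity) {
      // Evict the least recently used entry.
      const oldest = this.entries.keys().next().value;
      this.entries.delete(oldest);
    }
  }
}

const cache = new LruCache(2);
cache.set('/.htaccess', 'rules');
cache.set('/index.html', 'page');
cache.get('/.htaccess');               // touched on every request
cache.set('/other.html', 'page');      // evicts /index.html, not /.htaccess
console.log(cache.get('/index.html')); // undefined
```

A file that is read on every single request, such as a .htaccess file, keeps getting moved to the "most recently used" end and is therefore among the last candidates for eviction.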

The cache however seems to lose a lot of efficiency when we get to the (albeit unrealistic) 170Kb file full of comments, cutting throughput by 60%.

The "realistic" 12Kb .htaccess with IP restrictions is cutting throughput by 40%, close to the impact of the 17Kb file full of Options directives.

And the 170Kb file of repeating Options directives (which amounts to more than 8000 Options directives) tanks performance by a huge 90%.
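As a back-of-the-envelope check of that directive count, the sketch below counts how many lines it takes to fill 170Kb when alternating the two Options lines used in the test file. It assumes 1 Kb = 1024 bytes and one newline byte per line, neither of which is stated here; `countLinesToFill` is a name made up for this example.

```javascript
// Count how many lines are needed to reach targetBytes when the given
// lines are repeated in order. Assumes ASCII content and "\n" line endings.
function countLinesToFill(lines, targetBytes) {
  let size = 0;
  let count = 0;
  while (size < targetBytes) {
    size += lines[count % lines.length].length + 1; // +1 for the newline
    count++;
  }
  return count;
}

const optionsLines = ['Options +FollowSymLinks', 'Options -Indexes'];
console.log(countLinesToFill(optionsLines, 170 * 1024));
```

Under these assumptions the count lands between 8000 and 9000, in line with the figure quoted above.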

We're still much faster than the Wordpress test cases, but at this point it looks like you could mount an easy and somewhat hard to detect denial of service attack by dropping in a gigantic .htaccess file with the most costly directives you can find (which may not be Options).

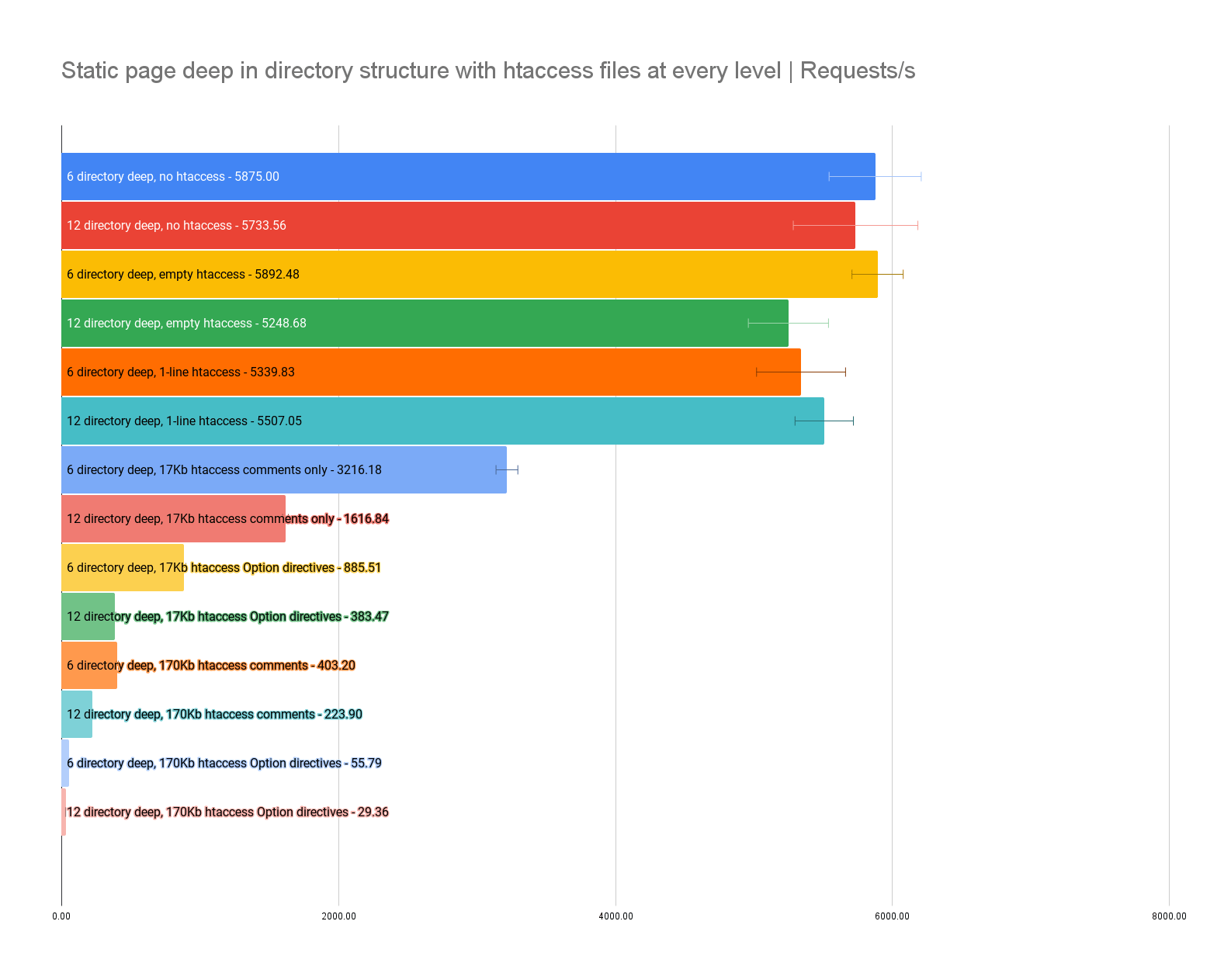

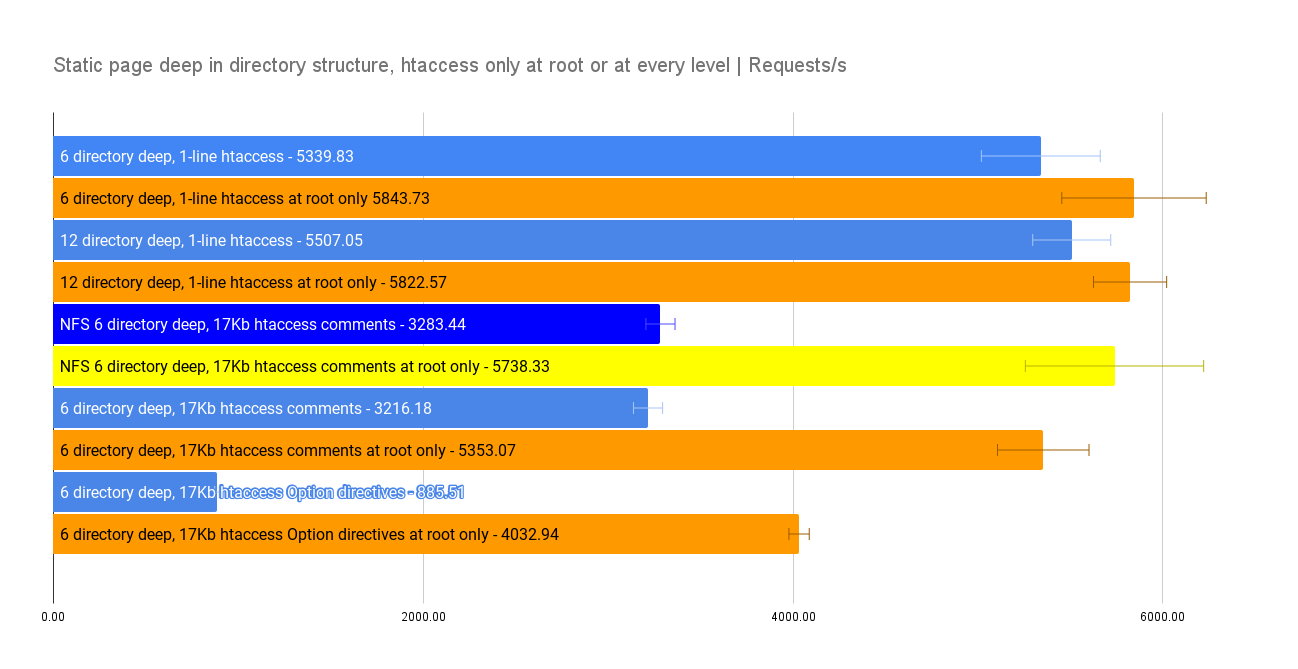

Directory hierarchy, .htaccess files at every level

These are somewhat unrealistic scenarios where we have .htaccess at every level of a directory structure and are requesting a document at a certain depth in it.

For instance:

https://host.local/1/2/3/4/5/6/file.html

We were curious as to how that works out since Apache has to look for .htaccess files at every level, load them, and apply them in order.

These results can't be directly compared with the static results from earlier because the requested file is smaller in this case.

The cases with "no htaccess" also mean that AllowOverride was set to None.

The same tests with AllowOverride set to All have the same throughput so we didn't include these results but it seems like the act of just looking for a file at every level doesn't have much if any impact.

The empty and single line .htaccess files had little impact as well, even at 12 directories deep. We're close to the max throughput for these cases and thus the variance is quite high.

This time around though, having to go through six 17Kb files made entirely of comment lines has a serious impact, which seems to increase linearly with the directory depth.

The unrealistic 170Kb htaccess files just destroy the throughput completely.

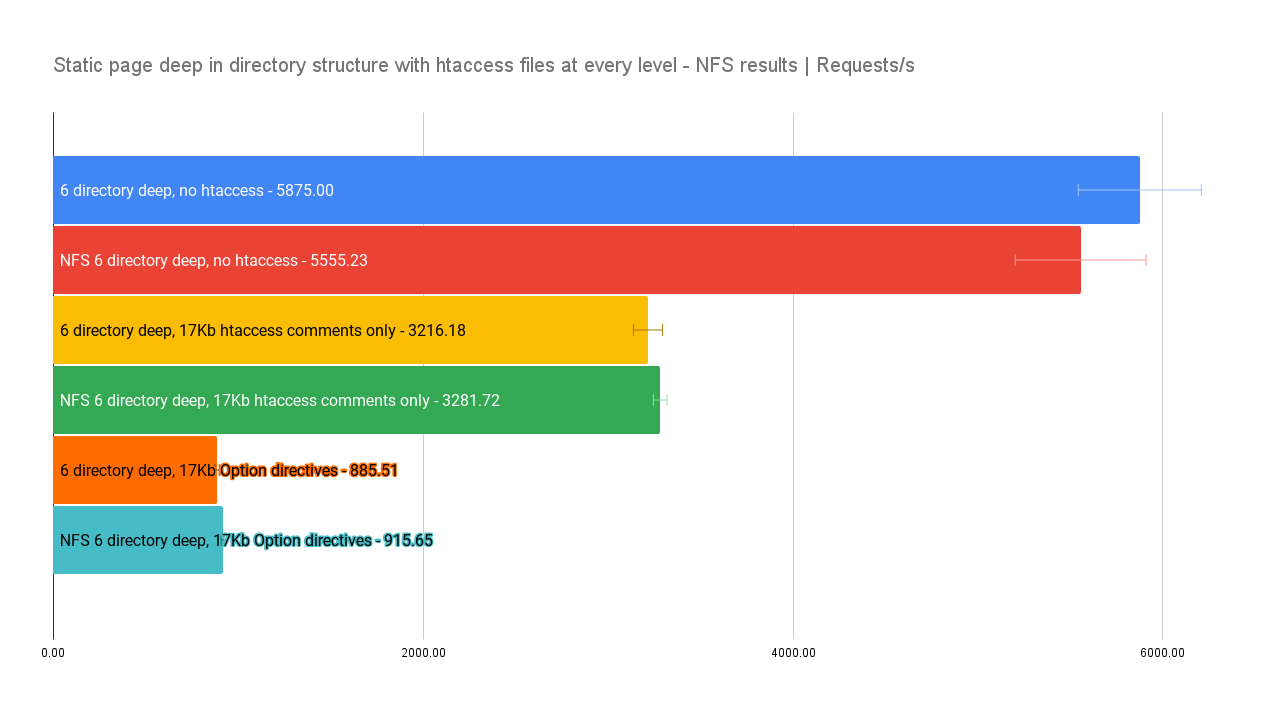

Same thing but on two different storage systems

We're running the same tests as above but comparing directly attached SSD storage (a best case scenario) to NFS.

The results are about the same, even though NFS is a much slower storage option.

As discussed before, it's probably due to the Linux read cache and possibly the NFS client's cache as well.

Directory hierarchy, comparing many VS a single .htaccess at the root

A more realistic scenario is being deep in a directory structure that only has one .htaccess file near or at the root of the host.

With the small single line files at every level we do see a difference approaching 10% compared to just having one file at the root.

Otherwise, the impact increases significantly when a .htaccess has to be read at every level.

Going 6 directories deep with a single 17Kb file made entirely of comments at the root, we're only about 9% slower than not having any .htaccess at all, and we're still well into the many thousands of requests per second.

Interestingly, the tests on NFS storage were faster than on directly attached SSDs, which might be due to extra caching done by the NFS client, though the variance was higher than usual with the NFS runs. We'd say the storage options yield about the same results.

Conclusion

It looks like a small, single .htaccess file has little impact on a static site but it does have an impact.

Does it justify using Nginx up front (ideally for all static content requests) and proxying to Apache when there is dynamic content such as PHP or the need for .htaccess overrides?

We'd say not really, unless you serve a lot of static content on rather powerful hardware. Otherwise the difference will be minimal and you'll probably be bottlenecked by PHP anyway, although that depends on how you use it (we only tested with Wordpress).

It also appeared that the performance of the storage has little to no impact for the tests we made, probably because Linux is caching the files anyway.

All of that being said, the recommendation to put any configuration override you want directly in the web server (or virtual host) config still stands strong. It's safer and yields the best performance.